Our ‘How do I’ (HDI) website was created by content designers pair-writing with store and operational colleagues. The aim was to provide operational policy information that is easy to understand in a busy store environment.

Store colleagues rely on ‘How do I’ to comply with legal regulations and maintain high standards of customer service. Colleagues tell us it’s useful, but that some information is difficult to find quickly. Our Content Design and Data Science teams worked together to test how generative artificial intelligence (AI) and a large language model (LLM) could help.

It proved to be a great opportunity to learn how content designers can work with teams who want to make the most of AI capability.

Taking a content design approach

As a Content Design team at Co-op, we create content that is evidence-based, user-focussed and built on shared standards to meet our commercial goals. We want to keep these content design principles at the centre of our approach to AI-generated content.

The teams designed a process that combined an AI system built by Co-op with a Microsoft LLM. When a user enters a query, the Co-op-built system searches a copy of our ‘How do I’ website and finds the information that is most likely to be relevant. It passes this information, along with the original question, to the Microsoft LLM. The LLM then generates a response, which is returned to the user as an answer.
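A design like this, where relevant content is retrieved first and then handed to an LLM together with the question, is often called retrieval-augmented generation (RAG). The following is a minimal Python sketch of that flow, not Co-op’s actual system. The page contents, the simple word-overlap retriever, the stop-word list and the `call_llm` placeholder are all hypothetical. No real Microsoft API is called; the placeholder just returns the prompt it would send.

```python
import re
from collections import Counter

# Hypothetical stand-in for the copy of the 'How do I' site: page title -> page text.
PAGES = {
    "Accepting cash": "Count the change in the till at the end of each shift.",
    "Age-restricted sales": "Ask for ID if the customer looks under 25 when selling alcohol.",
    "Using MyWork": "MyWork is the colleague app for checking rotas and requesting holiday.",
}

# Hypothetical list of common words ignored when matching.
STOPWORDS = {"how", "do", "i", "the", "a", "an", "to", "of", "on", "in",
             "is", "my", "for", "and", "at", "if", "when", "each"}


def tokens(text: str) -> list[str]:
    """Lowercase the text, split it into words and drop stop words."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def overlap(query: Counter, text: str) -> int:
    """Count how many query words also appear in the text."""
    page = Counter(tokens(text))
    return sum(min(n, page[w]) for w, n in query.items())


def retrieve(question: str, pages: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k page titles that share at least one word with the question."""
    query = Counter(tokens(question))
    scores = {title: overlap(query, title + " " + text) for title, text in pages.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [t for t in ranked[:k] if scores[t] > 0]


def build_prompt(question: str, pages: dict[str, str], titles: list[str]) -> str:
    """Combine the retrieved content and the original question into one prompt."""
    context = "\n\n".join(f"## {t}\n{pages[t]}" for t in titles)
    return (
        "Answer the colleague's question using only the content below.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


def call_llm(prompt: str) -> str:
    """Hypothetical placeholder for the hosted LLM; it echoes the prompt back."""
    return prompt


def answer(question: str) -> str:
    """Retrieve relevant pages, build the prompt and pass it to the LLM."""
    titles = retrieve(question, PAGES)
    return call_llm(build_prompt(question, PAGES, titles))
```

For example, `retrieve("How do I check my rota on MyWork?", PAGES)` matches only the ‘Using MyWork’ page, because ‘mywork’ is the one word the question and that page share. Plain word matching like this cannot tell ‘change’ meaning coins from ‘change’ meaning to alter something, which is one reason the wording of both the source content and the instructions sent to the LLM matters.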

How the AI works

All of the content on the ‘How do I’ website was created and designed according to content design principles. Because of the way LLMs work, they generate new content. Without content design expertise, that content is not subject to the same rigorous, user-focussed design process.

We needed to test how the AI was working to make sure it did not give misleading, unclear or inaccurate information. We analysed search data and worked with colleagues to identify the queries they search for most often. This helped us build an extensive list of test questions covering a wide range of operational, legal and safety-related themes.

Testing and analysing the AI responses

When we tested the AI system, we used the language our colleagues use. We asked both simple and complex questions, and included spelling mistakes and abbreviations. Then we analysed the AI system’s responses.

We took a content design approach and used our content guidelines to assess the responses. To validate their accuracy, we fact-checked them against the original ‘How do I’ content to see whether the AI had missed or misinterpreted anything.

We used this analysis to create a number of recommendations for how to improve the content of the AI responses.

Accuracy

Almost all the AI system’s responses were relevant to the question. But analysis showed it sometimes gave incorrect, incomplete or potentially misleading information. ‘How do I’ contains a lot of safety guidance, so to avoid risk to our colleagues, customers and business, we needed to make sure every response is 100% accurate.

Accessibility

The initial AI system responses were hard to read because they had been stripped of their original content design formatting and layout. Some responses also used conversational language that added a lot of unnecessary words. LLMs tend towards conversational responses. This can result in content that is not accessible and does not always get users to the information they need in the simplest way.

Language

The AI did not always understand the vocabulary our colleagues use. For example, it struggled to tell the difference between ‘change’ meaning loose coins and ‘change’ meaning to alter something. It did not understand that ‘MyWork’ refers to a Co-op colleague app. This meant it sometimes could not give relevant answers to our questions.

Using content design to improve the AI

Our Content Design team is now working with our Data Science team to explore how we can improve the AI system’s responses. We’re aiming to improve their accuracy and language, and to reduce unnecessary dialogue that distracts from the factual answers. We’re also exploring how we can improve the formatting and sequencing of the responses.

This collaborative approach is helping us get the most out of the technology and make sure it delivers high-quality, accessible content that meets our users’ needs.

Based on the content design recommendations, our Data Science team has changed the instructions that guide the AI, a practice known as ‘prompt engineering’. This affects the way the AI system breaks down and reformats information. We’re experimenting with how much freedom the AI has to interpret the source material, and we’re already seeing huge improvements in the accuracy, formatting and accessibility of the responses.

The impact of this AI work

“The ‘How do I’ project has been hugely innovative for the Co-op. Not only in the use of cutting-edge technology, but also in the close cross-business collaboration we needed to find new solutions to the interesting new problems associated with generative AI. We’ve worked closely with Joe Wheatley and the Customer Products team, as well as colleagues in our Software Development, Data Architecture and Store Operations teams. We’ve been able to combine skills, experience and knowledge from a wide range of business areas and backgrounds to build a pioneering new product designed with the needs of store colleagues at its core.”

Joe Wretham, Senior Data Scientist

The future of AI and content design

AI has so many possible applications, and it’s been exciting to explore them. This test work has also shown the critical role content design plays in making sure we design for our users. AI can create content that appears to make sense and sounds natural, but content needs to help users understand what they need to do next, quickly and easily.

Content designers understand users and their needs. This means understanding their motivations, the challenges they face, their environment, and the language they use. The testing we’ve done with the ‘How do I’ AI system shows that AI cannot do this alone, but when AI is combined with content design expertise, there are much better outcomes for the user and for commercial goals.

The Content Design team at Co-op has been exploring how to balance current content design responsibilities with developing new skills and exploring new areas in AI.

Blog by Joe Wheatley

Find out more about topics in this blog:

- Co-op guidelines on content design

- Co-op guidelines on accessibility

- Up-to-date information on how this work develops: Joe Wheatley, Content Designer, Operational Innovation team

- How you can get content design advice for your AI work at Co-op: Marianne Knowles, Principal Content Designer, Customer Product team

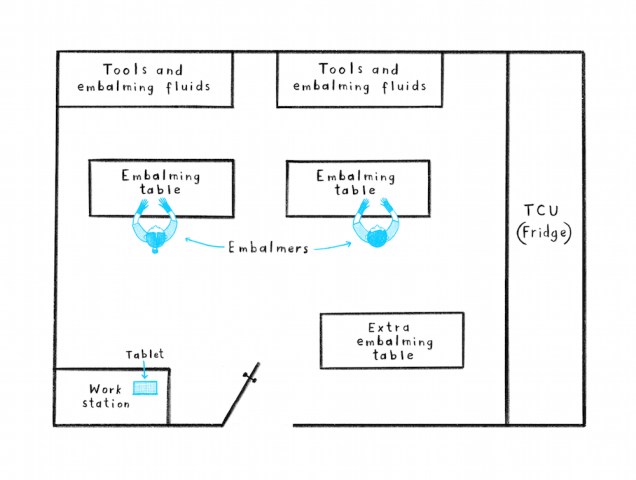

At the moment, our embalmers have tablets with their notes and instructions about how to present the deceased. They refer to them throughout the process. But colleagues tell us there are problems with this, for example:

We:
